
Karma Installation

Install the Karma CLI on Windows 10 and run it from the command line

D:\dev\myTestProjects\dobby-the-companion> npm install -g karma-cli

C:\Users\Helmut\AppData\Roaming\npm\karma -> C:\Users\Helmut\AppData\Roaming\npm\node_modules\karma-cli\bin\karma

+ karma-cli@1.0.1

updated 1 package in 0.421s

D:\dev\myTestProjects\dobby-the-companion> karma start

08 08 2018 16:03:24.227:WARN [karma]: No captured browser, open http://localhost:9876/

08 08 2018 16:03:24.232:WARN [karma]: Port 9876 in use

08 08 2018 16:03:24.233:INFO [karma]: Karma v1.7.1 server started at http://0.0.0.0:9877/

Run all Karma tests

D:\dev\myTestProjects\dobby-the-companion> ng test

10% building modules 1/1 modules 0 active

(node:25148) DeprecationWarning: Tapable.plugin is deprecated. Use new API on `.hooks` instead

10 08 2018 14:07:37.807:WARN [karma]: No captured browser, open http://localhost:9876/

10 08 2018 14:07:37.813:INFO [karma]: Karma v1.7.1 server started at http://0.0.0.0:9876/

10 08 2018 14:07:37.813:INFO [launcher]: Launching browser Chrome with unlimited concurrency

10 08 2018 14:07:37.817:INFO [launcher]: Starting browser Chrome

10 08 2018 14:07:43.581:WARN [karma]: No captured browser, open http://localhost:9876/

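Missing error messages with Angular/Karma testing – add the --source-map=false parameter

If a failing test only reports a generic message such as "[object ErrorEvent] thrown" instead of the real error, run the tests without source maps: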

D:\dev\myTestProjects\dobby-the-companion> ng test --source-map=false

10% building modules 1/1 modules 0 active

(node:6332) DeprecationWarning: Tapable.plugin is deprecated. Use new API on `.hooks` instead

10 08 2018 14:24:47.487:WARN [karma]: No captured browser, open http://localhost:9876/

10 08 2018 14:24:47.492:INFO [karma]: Karma v1.7.1 server started at http://0.0.0.0:9876/

10 08 2018 14:24:47.492:INFO [launcher]: Launching browser Chrome with unlimited concurrency

10 08 2018 14:24:47.504:INFO [launcher]: Starting browser Chrome

10 08 2018 14:24:50.370:WARN [karma]: No captured browser, open http://localhost:9876/

10 08 2018 14:24:50.748:INFO [Chrome 67.0.3396 (Windows 10.0.0)]: Connected on socket Ml0r-gsA3JNw1F0sAAAA with id 52984303

Run ONLY a single Karma test

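In your IDE, change describe to fdescribe (focused describe). Karma then runs only the focused suite(s). Use fit instead of it to focus a single spec: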

describe('LoginComponent', () => {

-> fdescribe('LoginComponent', () => {
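The rule behind fdescribe can be shown with a toy model in plain TypeScript. As soon as any suite is focused, only the focused suites run. If nothing is focused, everything runs. The `Suite` type and `selectSuites` function are hypothetical and only illustrate the rule. They are not Jasmine's implementation.

```typescript
// Toy model of the focus rule (illustration only, NOT Jasmine's implementation)
interface Suite {
  name: string;
  focused: boolean; // true for fdescribe, false for describe
}

// If at least one suite is focused, keep only the focused ones; otherwise keep all
function selectSuites(suites: Suite[]): string[] {
  const anyFocused = suites.some(s => s.focused);
  return suites
    .filter(s => !anyFocused || s.focused)
    .map(s => s.name);
}

const suites: Suite[] = [
  { name: 'AppComponent', focused: false },
  { name: 'LoginComponent', focused: true }, // fdescribe('LoginComponent', ...)
  { name: 'AuthGuard', focused: false },
];

console.log(selectSuites(suites)); // [ 'LoginComponent' ]
```

Before committing, change fdescribe back to describe. Otherwise every other suite is silently skipped on the next run.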


Using RouterTestingModule to test the Angular Router

Code to be tested

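import {Router} from '@angular/router';

// Message is the app's own model class (its import is not shown here)

export class MyComponent {

constructor( private router: Router ) { }

// Internal messages navigate to the route stored in messageLink

triggerMessageAction(m: Message) {

if (m.messageClass === 'internal') {

this.router.navigate([m.messageLink]);

}

}

}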

Karma/Jasmine Test Code

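import {async, inject, TestBed} from '@angular/core/testing';

import {RouterTestingModule} from '@angular/router/testing';

import {Router} from '@angular/router';

describe('WarningDialogComponent', () => {

beforeEach(async(() => {

TestBed.configureTestingModule({

...

imports: [ RouterTestingModule ],

}).compileComponents();

}));

beforeEach(inject([MessagesService], (service: MessagesService) => {

...

}));

it('should close Warning Dialog after pressing on a Warning Action ', inject([MessagesService, Router],

(service: MessagesService, router: Router) => {

// Install the spies BEFORE the action runs, otherwise no calls are recorded

spyOn(component, 'triggerMessageAction').and.callThrough();

// Router.navigate() returns a Promise<boolean>, so the stub returns one too

spyOn(router, 'navigate').and.returnValue(Promise.resolve(true));

// ... trigger the warning action here (e.g. click the dialog's action button)

expect(component.triggerMessageAction).toHaveBeenCalledWith(jasmine.objectContaining( {messageType: 'logout'}));

// navigate() is called with an array of route commands, not with a plain string

expect(router.navigate).toHaveBeenCalledWith(['login']);

}));

});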

Potential Error

Error: : Expected a spy, but got Function.

Usage: expect().toHaveBeenCalledWith(...arguments)

Fix: install a spy with spyOn() on the method you want to check, before the expect() call

spyOn(router, 'navigate').and.returnValue(Promise.resolve(true));

Using spyOn() to unit-test Angular Router calls

- spyOn() takes two parameters: the first is the object itself, and the second is the name of the method to be spied upon (as a string)

- By default it replaces the spied method with a stub and does not execute the real method; chain .and.callThrough() to run the real method as well

- spyOn() can only be called on methods that already exist on the object

- A spy only exists in the describe or it block in which it is defined, and it is removed after each spec
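The bullet points above can be made concrete with a hand-rolled spy in plain TypeScript. `miniSpyOn` is a hypothetical helper, not Jasmine code, and it behaves as follows:

- It records every call.
- It acts as a stub unless `callThrough` is set.
- It refuses to spy on a method that does not exist.
- It can be restored, much as Jasmine removes spies after each spec.

The `fakeRouter` object is likewise a made-up stand-in for Angular's Router.

```typescript
// Minimal hand-rolled spy (illustration only, NOT Jasmine's implementation)
interface MiniSpy {
  calls: unknown[][];   // arguments of every recorded call
  restore: () => void;  // puts the original method back
}

function miniSpyOn(obj: any, methodName: string, callThrough = false, returnValue?: unknown): MiniSpy {
  const original = obj[methodName];
  // Like spyOn(), only existing methods can be spied upon
  if (typeof original !== 'function') {
    throw new Error(`${methodName}() method does not exist`);
  }
  const spy: MiniSpy = {
    calls: [],
    restore: () => { obj[methodName] = original; },
  };
  obj[methodName] = function (this: unknown, ...args: unknown[]) {
    spy.calls.push(args);
    // Default: behave as a stub and skip the real method; callThrough runs it
    return callThrough ? original.apply(this, args) : returnValue;
  };
  return spy;
}

// Hypothetical router stand-in for this sketch
const fakeRouter = {
  navigated: [] as string[][],
  navigate(commands: string[]): boolean {
    this.navigated.push(commands);
    return true;
  },
};

const navSpy = miniSpyOn(fakeRouter, 'navigate', false, true);
fakeRouter.navigate(['ects']);
console.log(navSpy.calls.length);         // 1: the call was recorded
console.log(fakeRouter.navigated.length); // 0: the real method did not run
navSpy.restore();
```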

Sample Code

it(`Fake login should set the isAuthenticated flag and finally navigate to route: ects`,

async(inject([AuthenticationService, HttpTestingController],

(service: AuthenticationService, backend: HttpTestingController) => {

spyOn(service.router, 'navigate').and.returnValue(Promise.resolve(true));

service.login('Helmut', 'mySecret');

// Fake a HTTP response with a Bearer Token

// Ask the HTTP mock to return some fake data using the flush method:

backend.match({

method: 'POST'

})[0].flush({ Bearer: 'A Bearer Token: XXXXXXX' });

expect(service.router.navigate).toHaveBeenCalled();

expect(service.router.navigate).toHaveBeenCalledWith(['ects']);

expect(service.isAuthenticated()).toEqual(true);

})));
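In the sample above, the login callback only runs after the test flushes the pending request. The plain TypeScript sketch below shows that idea and loosely mimics what HttpTestingController does:

- A hypothetical `FakeBackend` queues requests.
- It answers a request only when `flush()` is called on it.

`FakeBackend`, `FakeAuthService`, the `/login` URL and the response shape are assumptions for illustration. None of them are Angular APIs.

```typescript
// Loose imitation of the HttpTestingController idea (illustration only, NOT Angular code)
interface PendingRequest {
  method: string;
  url: string;
  body: unknown;
  flush: (response: unknown) => void; // delivers a fake response to the waiting caller
}

class FakeBackend {
  private pending: PendingRequest[] = [];

  // Called by the code under test: the request is only queued, nobody answers yet
  request(method: string, url: string, body: unknown, onResponse: (response: unknown) => void): void {
    const req: PendingRequest = {
      method,
      url,
      body,
      flush: (response) => {
        this.pending = this.pending.filter(r => r !== req);
        onResponse(response);
      },
    };
    this.pending.push(req);
  }

  // Called by the test: find queued requests, similar in spirit to backend.match({ method: 'POST' })
  match(method: string): PendingRequest[] {
    return this.pending.filter(r => r.method === method);
  }
}

// Hypothetical service: stores the token and records a navigation once the response arrives
class FakeAuthService {
  private token: string | null = null;
  readonly navigations: string[][] = [];

  constructor(private backend: FakeBackend) {}

  login(user: string, password: string): void {
    // '/login' is a made-up URL for this sketch
    this.backend.request('POST', '/login', { user, password }, (response) => {
      this.token = (response as { Bearer: string }).Bearer;
      this.navigations.push(['ects']); // stands in for router.navigate(['ects'])
    });
  }

  isAuthenticated(): boolean {
    return this.token !== null;
  }
}

const backend = new FakeBackend();
const authService = new FakeAuthService(backend);
authService.login('Helmut', 'mySecret');
console.log(authService.isAuthenticated()); // false: the request is still pending
backend.match('POST')[0].flush({ Bearer: 'A Bearer Token: XXXXXXX' });
console.log(authService.isAuthenticated()); // true: the callback ran during flush()
```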

Reference

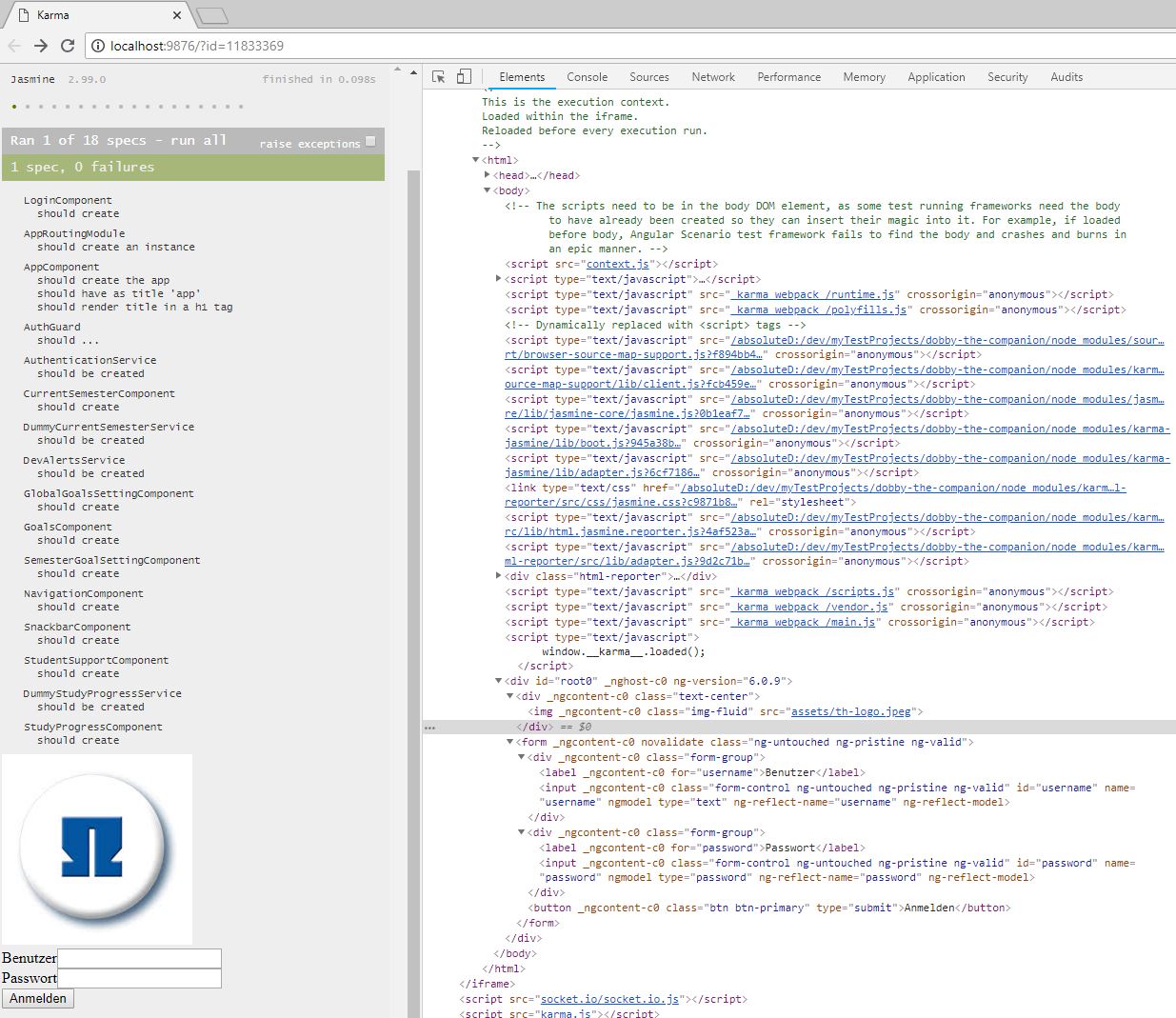

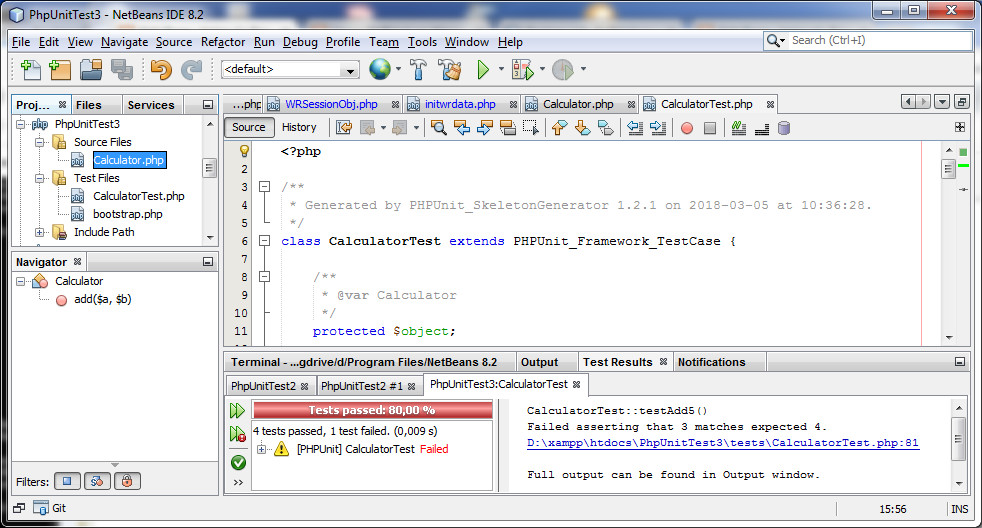

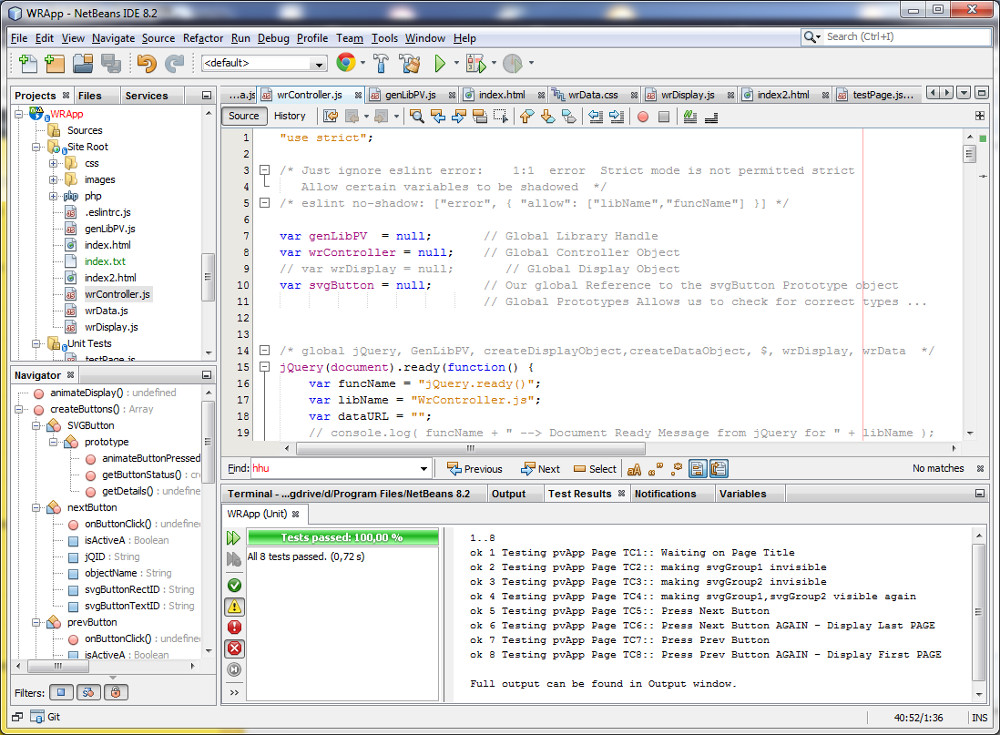

My First Karma Sample

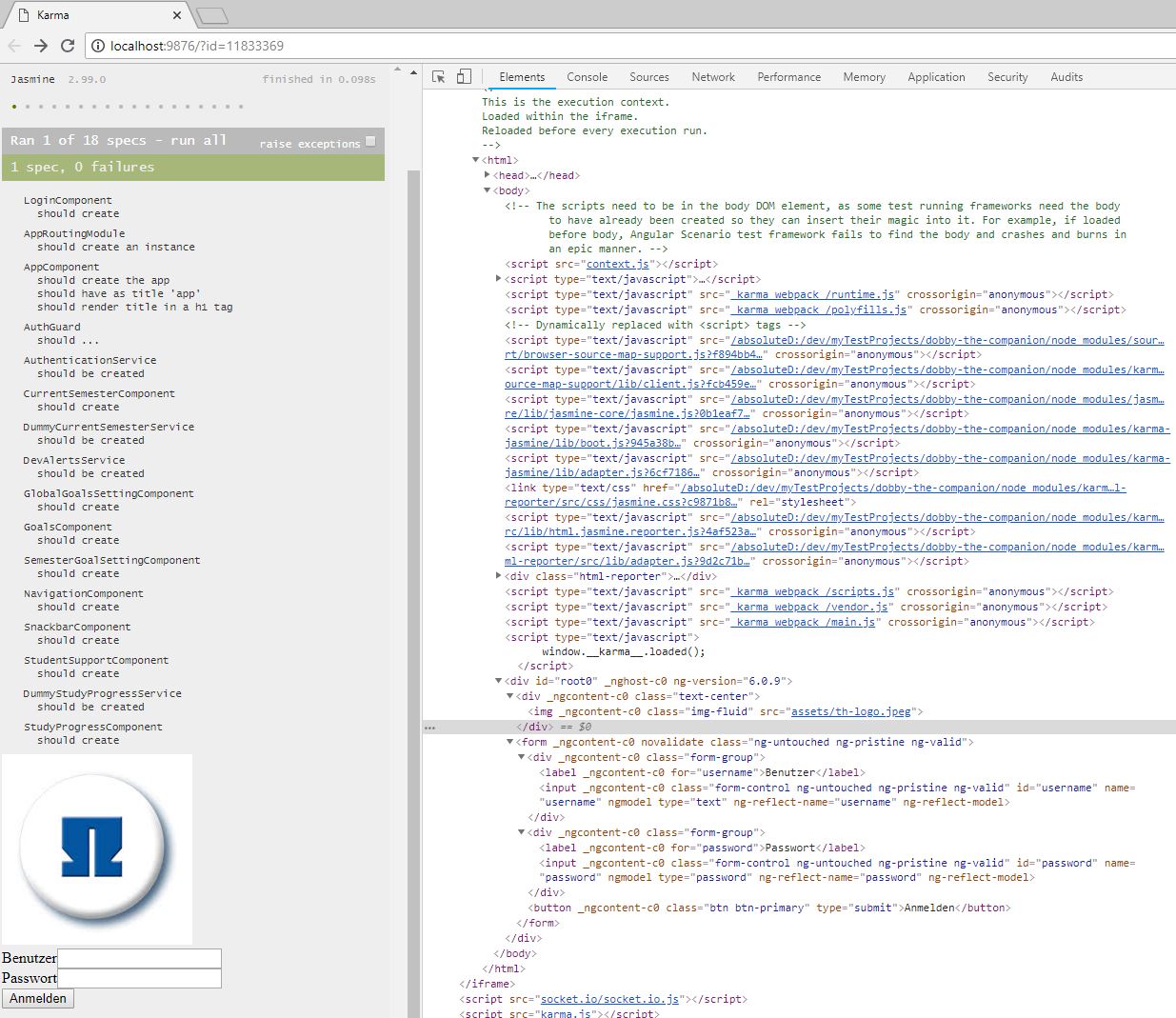

Screenshot: Chrome browser after running the tests

Details:

- Karma version: v1.7.1

- Only 1 of our 18 tests ran, because the suite uses fdescribe

- The test passed without errors

- The page is divided into 2 divs:

- The div with class html-report shows the test details

- The div with class root0 shows the rendered Angular components

HTML code (LoginComponent template):

<div class="text-center">

<h1>TestKarma</h1>

<p><img class="img-fluid" src="assets/th-logo.jpeg"></p>

</div>

<form (ngSubmit)="onLogin(f)" #f="ngForm">

<div class="form-group">

<label for="username">Benutzer</label><br />

<input type="text"

id="username"

name="username"

ngModel

class="form-control">

</div>

<div class="form-group">

<label for="password">Passwort</label><br />

<input type="password"

id="password"

name="password"

ngModel

class="form-control">

</div>

<p><button class="btn btn-primary" type="submit">Anmelden</button></p>

</form>

TypeScript test code: login.component.spec.ts

import { async, ComponentFixture, TestBed } from '@angular/core/testing';

import { LoginComponent } from './login.component';

import {FormsModule} from '@angular/forms';

import {RouterTestingModule} from '@angular/router/testing';

import {AuthenticationService} from '../authentication.service';

import {AuthGuard} from '../auth-guard.service';

fdescribe ('LoginComponent', () => {

let component: LoginComponent;

let fixture: ComponentFixture<LoginComponent>;

let h1: HTMLElement;

beforeEach(async(() => {

TestBed.configureTestingModule({

declarations: [ LoginComponent ],

imports: [FormsModule, RouterTestingModule ],

providers: [AuthenticationService]

})

.compileComponents();

}));

beforeEach(() => {

fixture = TestBed.createComponent(LoginComponent);

component = fixture.componentInstance;

h1 = fixture.nativeElement.querySelector('h1');

console.log(h1);

fixture.detectChanges();

});

it('should have <h1> with TestKarma', () => {

const loginElement: HTMLElement = fixture.nativeElement;

h1 = loginElement.querySelector('h1');

expect(h1.textContent).toEqual('TestKarma');

});

it('should be created', () => {

expect(component).toBeTruthy();

});

});


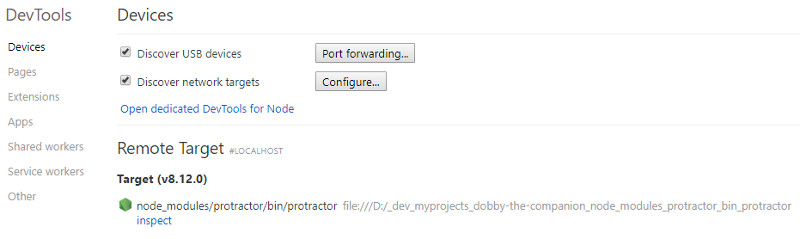

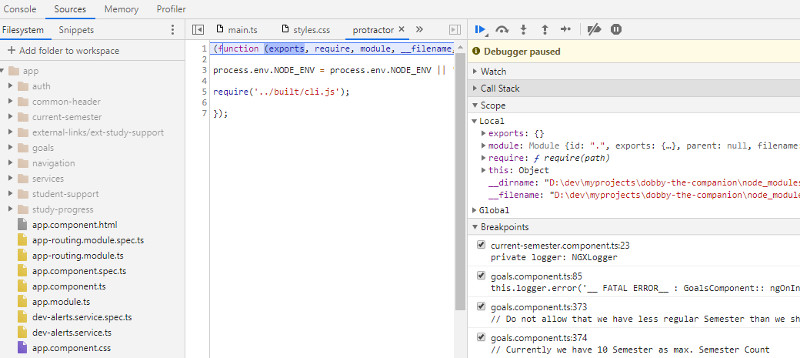

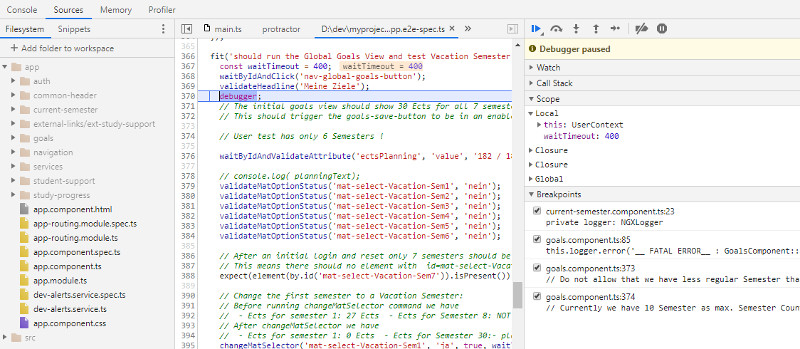

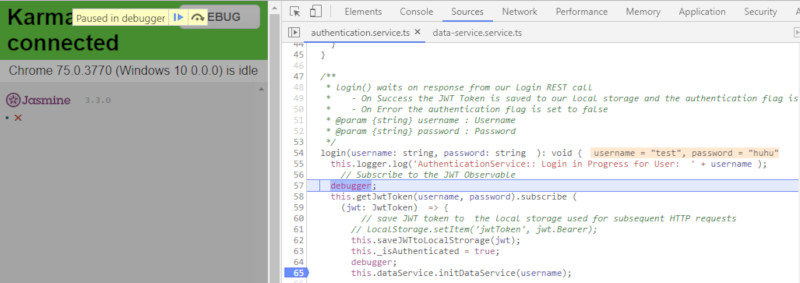

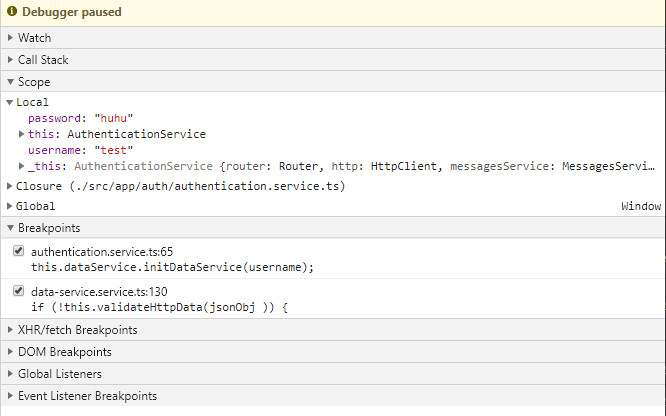

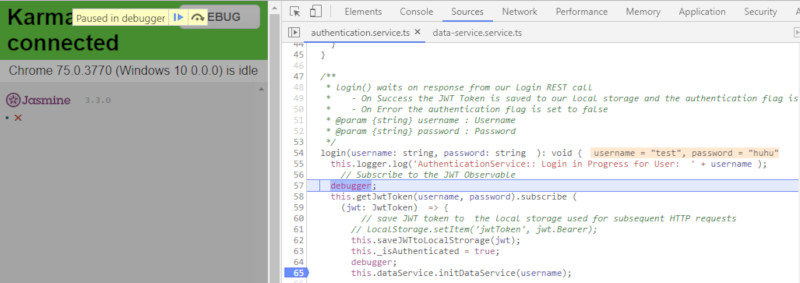

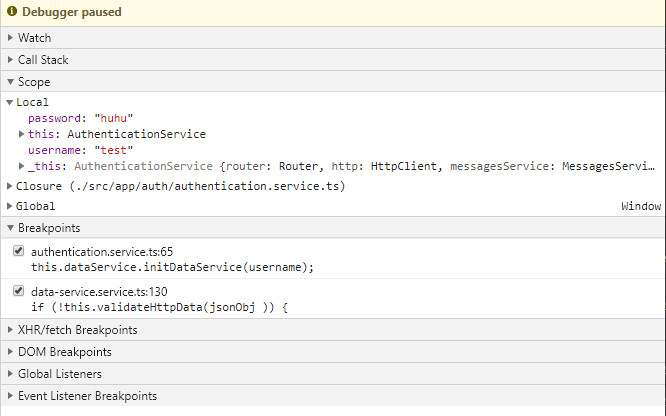

Debug Karma Test

- Add the debugger statement to the JavaScript/TypeScript code you want to debug

- Start the Karma tests with: ng test

- Open the Chrome DevTools in the Karma browser window by pressing F12

- Reload the page: execution should stop at the line with the debugger statement


A more complex sample: setting up a Karma test environment for integration tests

import { async, ComponentFixture, TestBed } from '@angular/core/testing';

import { CurrentSemesterComponent } from './current-semester.component';

import { CommonHeaderComponent } from '../common-header/common-header.component';

import { NavigationComponent } from '../navigation/navigation.component';

import { RouterTestingModule } from '@angular/router/testing';

import { MatIconModule } from '@angular/material/icon';

import {MatButtonToggleModule} from '@angular/material/button-toggle';

import {AuthenticationService} from '../auth/authentication.service';

fdescribe('CurrentSemesterComponent', () => {

let component: CurrentSemesterComponent;

let fixture: ComponentFixture<CurrentSemesterComponent>;

beforeEach(async(() => {

TestBed.configureTestingModule({

declarations: [ CurrentSemesterComponent,CommonHeaderComponent, NavigationComponent ],

providers: [AuthenticationService,],

imports: [RouterTestingModule, MatIconModule, MatButtonToggleModule ],

})

.compileComponents();

}));

beforeEach(() => {

fixture = TestBed.createComponent(CurrentSemesterComponent);

component = fixture.componentInstance;

fixture.detectChanges();

});

it('should create', () => {

expect(component).toBeTruthy();

});

});

Jasmine Test Order

- Currently (v2.x) Jasmine runs tests in the order they are defined

- However, there is a new (Oct 2015) option to run specs in a random order, which is still off by default

- Jasmine 3.0 made random order the default; with karma-jasmine it can be switched off in karma.conf.js via client: { jasmine: { random: false } }
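Random order is only useful if a failing order can be reproduced, which is why Jasmine prints the seed it used. The sketch below shows the principle with a small seeded PRNG (mulberry32) and a Fisher-Yates shuffle: the same seed always yields the same spec order. Both functions are illustrative assumptions; Jasmine's own shuffling code is different.

```typescript
// Illustration of seeded random ordering (NOT Jasmine's actual algorithm)

// mulberry32: a tiny deterministic PRNG returning floats in [0, 1)
function mulberry32(seed: number): () => number {
  let a = seed >>> 0;
  return () => {
    a = (a + 0x6d2b79f5) >>> 0;
    let t = a;
    t = Math.imul(t ^ (t >>> 15), t | 1);
    t ^= t + Math.imul(t ^ (t >>> 7), t | 61);
    return ((t ^ (t >>> 14)) >>> 0) / 4294967296;
  };
}

// Fisher-Yates shuffle driven by the seeded PRNG; returns a new array
function shuffleSpecs<T>(specs: readonly T[], seed: number): T[] {
  const rnd = mulberry32(seed);
  const result = specs.slice();
  for (let i = result.length - 1; i > 0; i--) {
    const j = Math.floor(rnd() * (i + 1));
    [result[i], result[j]] = [result[j], result[i]];
  }
  return result;
}

const specNames = ['should create', 'should have <h1> with TestKarma', 'should call navigate'];
console.log(shuffleSpecs(specNames, 4321));
console.log(shuffleSpecs(specNames, 4321)); // same seed, same order as the line above
```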


Jasmine and Timeout

Reference

https://makandracards.com/makandra/32477-testing-settimeout-and-setinterval-with-jasmine
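The linked article covers testing setTimeout/setInterval with Jasmine's mock clock: jasmine.clock().install(), jasmine.clock().tick(ms) and jasmine.clock().uninstall(). The idea behind a mock clock can be sketched in plain TypeScript. Timers are queued instead of scheduled, and tick() advances virtual time and fires whatever became due. `FakeClock` is a hypothetical class for illustration, not Jasmine's implementation.

```typescript
// Minimal virtual clock (illustration only, NOT jasmine.clock())
interface Timer {
  id: number;
  dueAt: number;
  callback: () => void;
}

class FakeClock {
  private now = 0;
  private nextId = 1;
  private timers: Timer[] = [];

  // Replacement for setTimeout: only remember the callback and when it is due
  setTimeout(callback: () => void, delayMs: number): number {
    const id = this.nextId++;
    this.timers.push({ id, dueAt: this.now + delayMs, callback });
    return id;
  }

  clearTimeout(id: number): void {
    this.timers = this.timers.filter(t => t.id !== id);
  }

  // Advance virtual time and run every timer that became due, earliest first
  tick(ms: number): void {
    const target = this.now + ms;
    for (;;) {
      const due = this.timers
        .filter(t => t.dueAt <= target)
        .sort((a, b) => a.dueAt - b.dueAt || a.id - b.id)[0];
      if (!due) break;
      this.timers = this.timers.filter(t => t !== due);
      this.now = due.dueAt;
      due.callback();
    }
    this.now = target;
  }
}

// Usage: code that would normally wait 5 seconds is checked instantly
const clock = new FakeClock();
const fired: string[] = [];
clock.setTimeout(() => fired.push('autosave'), 5000);
clock.tick(4999);
console.log(fired.length); // 0
clock.tick(1);
console.log(fired.length); // 1
```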

Error: 'router-outlet' is not a known element:

Error Details

'router-outlet' is not a known element:

1. If 'router-outlet' is an Angular component, then verify that it is part of this module.

2. If 'router-outlet' is a Web Component then add 'CUSTOM_ELEMENTS_SCHEMA' to the '@NgModule.schemas' of this component to suppress this message.

"<app-navigation></app-navigation>

<div class="container">

[ERROR ->]<router-outlet></router-outlet>

</div>

"): ng:///DynamicTestModule/AppComponent.html@2:2

Fix – import RouterTestingModule

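import { TestBed, async } from '@angular/core/testing';

import { AppComponent } from './app.component';

import {RouterTestingModule} from '@angular/router/testing';

describe('AppComponent', () => {

beforeEach(async(() => {

TestBed.configureTestingModule({

declarations: [

AppComponent

],

// RouterTestingModule provides router-outlet and the other router directives for the test

imports: [ RouterTestingModule ]

}).compileComponents();

}));

});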


Error: 'app-navigation' is not a known element:

Error Details

AppComponent should create the app

Failed: Template parse errors:

'app-navigation' is not a known element:

1. If 'app-navigation' is an Angular component, then verify that it is part of this module.

2. If 'app-navigation' is a Web Component then add 'CUSTOM_ELEMENTS_SCHEMA' to the '@NgModule.schemas' of this component to suppress this message. ("

<title>app</title>

[ERROR ->]<app-navigation></app-navigation>

Angular code

app.component.html

<app-navigation></app-navigation>

<div class="container">

<router-outlet></router-outlet>

</div>

navigation.component.ts

import {Component, OnInit} from '@angular/core';

import {AuthenticationService} from '../auth/authentication.service';

@Component({

selector: 'app-navigation',

templateUrl: './navigation.component.html',

styleUrls: ['./navigation.component.css']

})

...

Fix – add a stub component for app-navigation

import { TestBed, async } from '@angular/core/testing';

import { AppComponent } from './app.component';

import {RouterTestingModule} from '@angular/router/testing';

import {Component} from '@angular/core';

// Stub with the same selector as the real NavigationComponent, but an empty template and no dependencies

@Component({selector: 'app-navigation', template: ''})

class NavigationStubComponent {}

describe('AppComponent', () => {

beforeEach(async(() => {

TestBed.configureTestingModule({

declarations: [

AppComponent,

NavigationStubComponent

],

imports: [ RouterTestingModule ]

}).compileComponents();

}));

});


Error injecting a service

Error Details: NullInjectorError: No provider for AuthenticationService!

NavigationComponent should create

Error: StaticInjectorError(DynamicTestModule)[NavigationComponent -> AuthenticationService]:

StaticInjectorError(Platform: core)[NavigationComponent -> AuthenticationService]:

NullInjectorError: No provider for AuthenticationService!

Fix: add AuthenticationService to the providers array

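import { async, ComponentFixture, TestBed } from '@angular/core/testing';

import { DatePipe } from '@angular/common';

import { Overlay } from '@angular/cdk/overlay';

import { MatSnackBar } from '@angular/material/snack-bar';

import { NavigationComponent } from './navigation.component';

import {AuthenticationService} from '../auth/authentication.service';

// SnackBarComponent is the app's own component; its import path is not shown here

describe('NavigationComponent', () => {

let component: NavigationComponent;

let fixture: ComponentFixture<NavigationComponent>;

beforeEach(async(() => {

TestBed.configureTestingModule({

declarations: [ NavigationComponent ],

providers: [

MatSnackBar,

Overlay,

AuthenticationService, // the provider reported as missing in the error above

SnackBarComponent,

DatePipe],

})

.compileComponents();

}));

});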
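The provider is needed because the test module's injector can only hand out classes that were registered with it. A toy injector in plain TypeScript shows the mechanism. `MiniInjector` and the two classes below are hypothetical stand-ins. The error text only imitates the shape of Angular's NullInjectorError message and is not Angular's injector.

```typescript
// Toy injector (illustration only, NOT Angular's injector)
type Ctor<T> = new (...args: any[]) => T;

class MiniInjector {
  private providers = new Map<Ctor<unknown>, () => unknown>();
  private instances = new Map<Ctor<unknown>, unknown>();

  // Register a class together with the classes its constructor needs
  provide<T>(token: Ctor<T>, deps: Ctor<unknown>[] = []): void {
    this.providers.set(token, () => new token(...deps.map(d => this.get(d))));
  }

  // Resolve (and cache) an instance; unregistered classes fail like a NullInjectorError
  get<T>(token: Ctor<T>): T {
    if (!this.instances.has(token)) {
      const factory = this.providers.get(token);
      if (!factory) {
        throw new Error(`NullInjectorError: No provider for ${token.name}!`);
      }
      this.instances.set(token, factory());
    }
    return this.instances.get(token) as T;
  }
}

// Hypothetical stand-ins for the classes named in the error message above
class AuthenticationService {
  isAuthenticated(): boolean { return false; }
}
class NavigationComponent {
  constructor(public auth: AuthenticationService) {}
}

const injector = new MiniInjector();
injector.provide(NavigationComponent, [AuthenticationService]);
try {
  injector.get(NavigationComponent);
} catch (e) {
  console.log((e as Error).message); // NullInjectorError: No provider for AuthenticationService!
}
injector.provide(AuthenticationService); // the fix: register the missing provider
console.log(injector.get(NavigationComponent).auth instanceof AuthenticationService); // true
```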

Error: Failed: Template parse errors

Error Details: There is no directive with "exportAs" set to "ngForm"

LoginComponent should create

Failed: Template parse errors:

There is no directive with "exportAs" set to "ngForm" ("

<form (ngSubmit)="onLogin(f)" [ERROR ->]#f="ngForm">

<div class="form-group">

<label for="username">Benutzer</label>

"): ng:///DynamicTestModule/LoginComponent.html@5:30

Fix: Add FormsModule Import

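import { async, ComponentFixture, TestBed } from '@angular/core/testing';

import { FormsModule } from '@angular/forms';

import { LoginComponent } from './login.component';

import { AuthenticationService } from '../authentication.service';

describe('LoginComponent', () => {

let component: LoginComponent;

let fixture: ComponentFixture<LoginComponent>;

beforeEach(async(() => {

TestBed.configureTestingModule({

declarations: [ LoginComponent ],

// FormsModule provides the NgForm directive that #f="ngForm" refers to

imports: [FormsModule ],

providers: [AuthenticationService]

})

.compileComponents();

}));

});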


Error: StaticInjectorError(DynamicTestModule)[AuthGuard -> Router]:

Error Details: NullInjectorError: No provider for Router!

Error: StaticInjectorError(DynamicTestModule)[AuthGuard -> Router]:

StaticInjectorError(Platform: core)[AuthGuard -> Router]:

NullInjectorError: No provider for Router!


Fix: add RouterTestingModule

import { TestBed, async, inject } from '@angular/core/testing';

import { AuthGuard } from './auth-guard.service';

import {AuthenticationService} from '../auth/authentication.service';

import {RouterTestingModule} from '@angular/router/testing';

describe('AuthGuard', () => {

beforeEach(() => {

TestBed.configureTestingModule({

providers: [AuthGuard,

AuthenticationService,

],

imports: [

RouterTestingModule

],

});

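});

it('should create the guard', inject([AuthGuard], (guard: AuthGuard) => {

expect(guard).toBeTruthy();

}));

});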

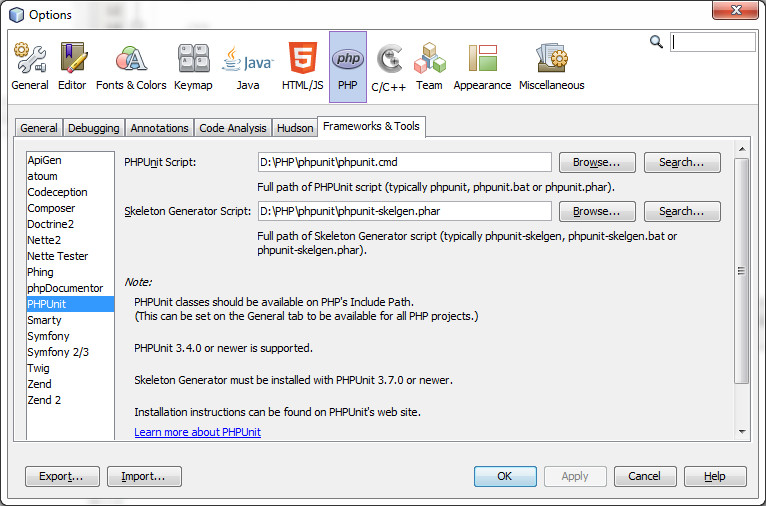

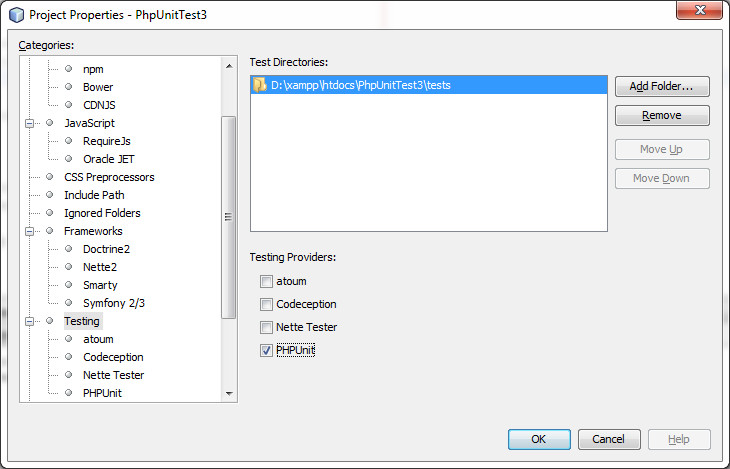

Setup for NGXLogger

Note: the setup below uses a single logger instance for both the code base and the Karma/Jasmine tests!

import { TestBed, inject } from '@angular/core/testing';

import {LoggerConfig, LoggerTestingModule, NGXLogger, NgxLoggerLevel} from 'ngx-logger';

fdescribe('InterceptService', () => {

beforeEach(() => {

TestBed.configureTestingModule({

imports: [

LoggerTestingModule

],

providers: [ NGXLogger, LoggerConfig,

... ]

});

});

fit(' should create and test NGXLogger !',

inject([NGXLogger], (logger: NGXLogger) => {

// config the Logger

logger.updateConfig({ level: NgxLoggerLevel.DEBUG });

// send a test message from our Karma code to the logger

logger.log('Logger Message from Karma Testing Module !');

// Override the logger instance of the component under test (component is set up in the omitted part of the spec)

component.logger = logger;

// Invoke a function to test NGXLogger functionality works for our Code Base too !

component.syncSemsterData();

expect(component).toBeTruthy();

}));

});

Logger output:

2019-08-13T11:37:46.298Z LOG [semester-goal-setting.component.spec.ts:95] Logger Message from Karma Testing Module !

2019-08-13T11:37:46.743Z LOG [semester-goal-setting.component.ts:130] syncSemsterData():: Subjects: 3 - all Marks Valid : true - saveButtonIsDisabled false
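Because the test and the component write through the same logger instance, a level configured in the test also applies to the production code. The toy sketch below shows that in plain TypeScript. `MiniLogger`, its `Level` enum (loosely modeled on ngx-logger's level names) and `SemesterComponentSketch` are hypothetical. They are not the ngx-logger API.

```typescript
// Toy leveled logger (illustration only, NOT ngx-logger)
enum Level { TRACE, DEBUG, INFO, LOG, WARN, ERROR, OFF }

class MiniLogger {
  readonly lines: string[] = [];
  private level = Level.WARN; // assumed default for this sketch

  updateConfig(config: { level: Level }): void {
    this.level = config.level;
  }

  private write(level: Level, message: string): void {
    // Messages below the configured level are dropped
    if (level >= this.level) {
      this.lines.push(`${Level[level]} ${message}`);
    }
  }

  debug(message: string): void { this.write(Level.DEBUG, message); }
  log(message: string): void { this.write(Level.LOG, message); }
  warn(message: string): void { this.write(Level.WARN, message); }
}

// Hypothetical component that logs through whatever logger it was given
class SemesterComponentSketch {
  constructor(public logger: MiniLogger) {}
  syncData(): void {
    this.logger.log('syncData():: called');
  }
}

// One shared instance: configuring it in the test also affects the component
const sharedLogger = new MiniLogger();
const semesterComp = new SemesterComponentSketch(new MiniLogger());
sharedLogger.updateConfig({ level: Level.DEBUG });
sharedLogger.log('Logger Message from test');
semesterComp.logger = sharedLogger; // same idea as component.logger = logger
semesterComp.syncData();
console.log(sharedLogger.lines.length); // 2: both messages went through the shared logger
```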

A more complex sample: mocking services and components

@Component({selector: 'app-semester-header', template: ''})

class SemesterHeaderStubComponent {}

@Component({selector: 'app-navigation', template: ''})

class NavigationStubComponent {}

export class MockDataService {

public isReady = true;

getIsReady(): boolean {

return this.isReady;

}

setAppStatusFailed(): void {}

getSemesterById(): Semester {

return new Semester(1, 20, 30 , false, true,

[ new Module('Mathematik', 8, 2.2, 2.0, 3.0, true),

new Module('JAVA Programming 2', 7, 2.2, 2.0, 3.0, true),

]);

}

}

describe('CurrentSemesterComponent', () => {

let component: CurrentSemesterComponent;

let fixture: ComponentFixture<CurrentSemesterComponent>;

beforeEach(() => {

TestBed.configureTestingModule({

declarations: [ CurrentSemesterComponent, SemesterHeaderStubComponent, NavigationStubComponent],

imports: [

LoggerTestingModule,

RouterTestingModule,

NoopAnimationsModule

],

schemas: [NO_ERRORS_SCHEMA],

providers: [

NGXLogger,

LoggerConfig,

{provide: DataService, useValue: new MockDataService() } ]

});

fixture = TestBed.createComponent(CurrentSemesterComponent);

component = fixture.componentInstance;

fixture.detectChanges();

});

it('should create', () => {

expect(component).toBeTruthy();

});

/*

Whenever dataService is not ready setAppStatusFailed() should be called with systemError param

*/

it('if dataService not READY setAppStatusFailed() should be called with param \'systemError\' ', () => {

let dataService: MockDataService;

dataService = TestBed.get(DataService);

const spy = spyOn(dataService, 'setAppStatusFailed');

dataService.isReady = false;

component.ngOnInit();

expect(component).toBeTruthy();

expect(dataService.setAppStatusFailed).toHaveBeenCalledWith('systemError');

});

/*

Whenever dataService is READY, setAppStatusFailed() should NOT be called

*/

it('if dataService is READY setAppStatusFailed() should not have been called', () => {

let dataService: MockDataService;

dataService = TestBed.get(DataService);

const spy = spyOn(dataService, 'setAppStatusFailed');

dataService.isReady = true;

component.ngOnInit();

expect(component).toBeTruthy();

expect(dataService.setAppStatusFailed).not.toHaveBeenCalled();

});

});
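The same pattern also works without TestBed. The component depends on an interface, and the test hands in a mock whose `setAppStatusFailed` records its calls. The component logic below is a hypothetical reconstruction inferred from the two specs above: `ngOnInit` calls `setAppStatusFailed('systemError')` when the service is not ready. It is not the original component code.

```typescript
// Hypothetical reconstruction for illustration; the real CurrentSemesterComponent is not shown
interface DataServiceLike {
  getIsReady(): boolean;
  setAppStatusFailed(reason: string): void;
}

class CurrentSemesterSketch {
  constructor(private dataService: DataServiceLike) {}

  ngOnInit(): void {
    if (!this.dataService.getIsReady()) {
      this.dataService.setAppStatusFailed('systemError');
    }
  }
}

// Mock that records calls instead of changing any real app state
class RecordingMockDataService implements DataServiceLike {
  isReady = true;
  failedCalls: string[] = [];

  getIsReady(): boolean {
    return this.isReady;
  }

  setAppStatusFailed(reason: string): void {
    this.failedCalls.push(reason);
  }
}

const mockService = new RecordingMockDataService();
mockService.isReady = false;
new CurrentSemesterSketch(mockService).ngOnInit();
console.log(mockService.failedCalls); // [ 'systemError' ]
```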

Mock a Service Object

export class MockDataService {

public isReady = true;

getSemesterById(semId: number): Semester {

return new Semester( '20192' , 30, 0, '', 0,

[

new Mark( 4.5, 5, new Exam ( '6', 'Mathematik S1'), 3, true ),

new Mark( 2.5, 7, new Exam ( '5', 'JAVA Programming II S1'), 2, true ),

new Mark( 1.5, 8, new Exam ( '4', 'C ++ Programming II S1'), 2.7, true ),

]);

}

}

fdescribe('SemesterGoalSettingComponent', () => {

let component: SemesterGoalSettingComponent;

let fixture: ComponentFixture<SemesterGoalSettingComponent>;

beforeEach(async(() => {

TestBed.configureTestingModule({

declarations: [ SemesterGoalSettingComponent ],

schemas: [NO_ERRORS_SCHEMA],

imports: [ MatTableModule, MatDialogModule, RouterTestingModule, LoggerTestingModule , HttpClientModule,

HttpClientTestingModule, MatSnackBarModule],

providers: [ NGXLogger, LoggerConfig, MatSnackBar, MatDialog,

{ provide: DataService, useValue: new MockDataService() } ]

})

.compileComponents();

}));

...

Simulate a Button Press Action

Karma/Jasmine Test Code:

beforeEach(() => {

fixture = TestBed.createComponent(SemesterHeaderComponent);

component = fixture.componentInstance;

// 'autoDetectChanges' will continually check for changes until the test is complete.

// This is slower, but necessary for some UI updates, e.g. changes rendered by *ngFor

fixture.autoDetectChanges(true);

});

it('Button Press should redirect to Central ECTS Page ', () => {

spyOn(component, 'navigateTop');

const el1 = fixture.debugElement.query(By.css('#return-to-top-level-page')).nativeElement;

el1.click();

expect(component.navigateTop).toHaveBeenCalled();

});

HTML Code:

<button id="return-to-top-level-page" class="mat-button-study-progress" mat-button (click)="navigateTop()" > <mat-icon class="button-navigate-back color_white" >arrow_back_ios</mat-icon> </button>

TypeScript Code:

navigateTop() {

this.logger.warn('SemesterHeaderComponent::navigate to ECTS Page !: ');

this.router.navigate(['ects']);

}
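The spy on `navigateTop` is installed after the component was created, yet it still sees the click. This works because the click handler looks the method up on the component instance at click time. The plain TypeScript sketch below contrasts that late lookup with a handler that captured the function reference early. `FakeButton` and `HeaderSketch` are hypothetical stand-ins. The working test above suggests that Angular's `(click)="navigateTop()"` binding behaves like the late-lookup variant; that is an inference, not something the sketch proves.

```typescript
// Illustration only: why a spy installed after binding still sees the click
class FakeButton {
  private handlers: Array<() => void> = [];
  addClickHandler(handler: () => void): void { this.handlers.push(handler); }
  click(): void { this.handlers.forEach(h => h()); }
}

class HeaderSketch {
  navigations: string[][] = [];
  navigateTop(): void {
    this.navigations.push(['ects']); // stands in for this.router.navigate(['ects'])
  }
}

const header = new HeaderSketch();
const lateButton = new FakeButton();
const earlyButton = new FakeButton();

// Late lookup: the method is read from the instance on every click
lateButton.addClickHandler(() => header.navigateTop());
// Early capture: the original function is stored once, at binding time
const captured = header.navigateTop;
earlyButton.addClickHandler(() => captured.call(header));

// "Spy": replace the method on the instance after the bindings exist
let spyCalls = 0;
header.navigateTop = () => { spyCalls++; };

lateButton.click();  // goes through the replaced method
earlyButton.click(); // bypasses it and runs the original method
console.log(spyCalls);                  // 1
console.log(header.navigations.length); // 1
```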